At CES 2026, discussions around AI computing infrastructure moved beyond the race for ever more powerful individual GPUs. Instead, the focus was on a bigger question: what needs to change when AI moves from virtual models to real-world systems?

For many AI applications, especially those connected to physical environments, this question is no longer theoretical. It directly affects how systems are designed, deployed, and operated over time.

Topics such as the Rubin platform, virtual AI training, physical AI, and autonomous driving were discussed together, and the message was clear: AI computing is no longer only about faster hardware, but about how the whole system works.

When adding more compute is no longer enough

In the early days of AI inference, improving performance was relatively simple.

Models were smaller, workloads were stable, and adding more compute usually led to better results.

As AI systems expand into real-world use cases such as industrial automation, intelligent infrastructure, and edge deployments, this approach starts to break down. Compute power still matters, but it does not always translate directly into higher throughput or better system behavior.

Latency becomes harder to control, systems run continuously, and the cost of running inference grows over time. At this stage, the real issue is no longer how much compute you have, but how efficiently the system uses it.
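To make "how efficiently the system uses it" measurable, one option is to split each request's end-to-end time into waiting, data movement, and computation. The Python sketch below assumes such per-request timings are already available from your own tracing; the `RequestTiming` phases and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestTiming:
    """Per-request wall-clock breakdown in milliseconds (hypothetical phases)."""
    queue_ms: float      # waiting for a scheduler slot
    transfer_ms: float   # moving inputs/outputs between host, device, and storage
    compute_ms: float    # time the accelerator spends running the model

    @property
    def total_ms(self) -> float:
        return self.queue_ms + self.transfer_ms + self.compute_ms


def compute_fraction(timings: list[RequestTiming]) -> float:
    """Share of total end-to-end time that was spent on actual computation."""
    total = sum(t.total_ms for t in timings)
    if total == 0:
        raise ValueError("no time recorded")
    return sum(t.compute_ms for t in timings) / total


if __name__ == "__main__":
    # Illustrative numbers only: compute is fast, waiting and transfers dominate.
    sample = [RequestTiming(12.0, 6.0, 8.0), RequestTiming(20.0, 5.0, 8.0)]
    print(f"compute fraction: {compute_fraction(sample):.2f}")
```

A low fraction here means that a faster chip would shorten only a small part of each request, which is the situation the rest of this article describes.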

Problems at scale are often system problems

In real deployments, performance issues often come from coordination rather than computation.

Data moves between CPUs, GPUs, and storage, and multiple nodes must stay in sync, so network delays and scheduling overhead become visible.

These challenges are familiar in large cloud systems, but they become even more noticeable in distributed and edge-oriented environments, where resources are limited and systems must remain stable for long periods.
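A toy model shows why coordination overhead caps scaling. The sketch assumes compute divides evenly across nodes while each step pays a synchronization cost that grows with log2 of the node count, loosely in the spirit of tree-based collectives; both the formula and the 100 ms / 5 ms parameters are illustrative assumptions, not measurements.

```python
import math


def step_time_ms(n_nodes: int, compute_ms: float, sync_ms: float) -> float:
    """Toy model: compute splits evenly, coordination grows with log2(n_nodes).

    The log2 term is an assumption; real systems may scale better or worse.
    """
    if n_nodes < 1:
        raise ValueError("need at least one node")
    return compute_ms / n_nodes + sync_ms * math.log2(n_nodes)


def speedup(n_nodes: int, compute_ms: float, sync_ms: float) -> float:
    """Speedup relative to a single node under the same toy model."""
    return step_time_ms(1, compute_ms, sync_ms) / step_time_ms(n_nodes, compute_ms, sync_ms)


if __name__ == "__main__":
    # Hypothetical workload: 100 ms of compute per step, 5 ms per coordination round.
    for n in (1, 2, 4, 8, 16, 32):
        print(f"{n:>2} nodes: speedup {speedup(n, 100.0, 5.0):.2f}x")
```

With these assumed numbers, speedup flattens around 8 to 16 nodes, and at 32 nodes each step is slower than at 16, because the coordination term keeps growing while the per-node compute keeps shrinking.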

Typical situations include:

1) GPU usage appears normal, but system performance stops improving

2) Increasing batch size does not help and may increase latency

3) Adding hardware increases complexity without reducing overall cost

These are signs that the system itself has become the bottleneck.
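Two of these symptoms can be checked from ordinary benchmark runs. The hypothetical helper below compares a baseline against a run with more GPUs, and a small batch against a large one; the `Measurement` fields, the 0.7 efficiency threshold, and the 5% throughput cutoff are arbitrary choices for illustration.

```python
from typing import NamedTuple


class Measurement(NamedTuple):
    """One benchmark run (hypothetical fields)."""
    gpus: int
    batch_size: int
    throughput_rps: float   # requests per second
    p99_latency_ms: float


def scaling_efficiency(base: Measurement, scaled: Measurement) -> float:
    """Observed speedup divided by the ideal, linear speedup from adding GPUs."""
    ideal = scaled.gpus / base.gpus
    observed = scaled.throughput_rps / base.throughput_rps
    return observed / ideal


def system_limited_signs(base: Measurement, scaled: Measurement,
                         small_batch: Measurement, large_batch: Measurement,
                         min_efficiency: float = 0.7,
                         min_batch_gain: float = 1.05) -> list[str]:
    """List the symptoms that show up in the given runs (thresholds are arbitrary)."""
    signs = []
    if scaling_efficiency(base, scaled) < min_efficiency:
        signs.append("adding GPUs gives diminishing returns")
    batch_gain = large_batch.throughput_rps / small_batch.throughput_rps
    if batch_gain < min_batch_gain and large_batch.p99_latency_ms > small_batch.p99_latency_ms:
        signs.append("larger batches raise latency without raising throughput")
    return signs


if __name__ == "__main__":
    # Illustrative numbers only.
    base = Measurement(gpus=4, batch_size=16, throughput_rps=1000.0, p99_latency_ms=40.0)
    scaled = Measurement(gpus=8, batch_size=16, throughput_rps=1200.0, p99_latency_ms=55.0)
    bigger_batch = Measurement(gpus=8, batch_size=64, throughput_rps=1220.0, p99_latency_ms=140.0)
    for sign in system_limited_signs(base, scaled, scaled, bigger_batch):
        print("-", sign)
```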

From compute-limited to system-limited AI

| Aspect | Compute-Limited Stage | System-Limited Stage |
|---|---|---|
| Main bottleneck | Not enough compute | Waiting, coordination, data movement |
| Scaling effect | Throughput scales with more hardware | Diminishing returns |
| GPU utilization | Low or unstable | Looks high, but inefficient |
| Latency behavior | Average latency dominates | P95 / P99 grow quickly |
| Cost driver | Hardware performance and price | Idle time, power, operations |
| Optimization focus | Faster chips | Shorter data and scheduling paths |
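The gap between average and tail latency is easy to see with a few lines of code. This sketch uses the nearest-rank percentile definition (one of several in common use) on synthetic latencies where a handful of requests were held up, for example by a scheduling stall; the data is made up for illustration.

```python
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def latency_summary(samples_ms: list[float]) -> dict[str, float]:
    """Mean alongside the P95 and P99 tail percentiles."""
    return {
        "mean": statistics.fmean(samples_ms),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
    }


if __name__ == "__main__":
    # Synthetic data: 97 fast requests and 3 that waited behind a stall.
    samples = [20.0] * 97 + [250.0] * 3
    print(latency_summary(samples))
```

Here the mean is about 27 ms and P95 is 20 ms, yet P99 is 250 ms: the average hides exactly the requests that the system-limited column is concerned with.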

Inference cost is no longer just about hardware

When systems reach this point, inference cost changes in nature.

It is no longer only about GPU price or raw performance. It is influenced by waiting time inside the system, inefficient scheduling, frequent data movement, and power consumption during continuous operation.

For industrial and edge AI systems, these factors are especially important. Systems often run 24/7, are deployed in large numbers, and must operate within strict power and maintenance limits.
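A simple per-inference cost model makes this concrete. The sketch below amortizes hardware over its lifetime, adds energy and operations cost per hour, and divides by the inferences actually served; every parameter name and value is a hypothetical placeholder, and the model deliberately ignores effects such as lower power draw while idle.

```python
from dataclasses import dataclass


@dataclass
class NodeCostModel:
    """All parameters are hypothetical inputs, not vendor figures."""
    hardware_cost: float          # purchase price, in any currency
    lifetime_hours: float         # amortization period, e.g. 3 years of 24/7 operation
    avg_power_w: float            # average power draw, treated as constant
    energy_price_per_kwh: float
    ops_cost_per_hour: float      # maintenance, monitoring, site costs
    peak_inferences_per_s: float  # what the hardware could do if it never waited
    useful_fraction: float        # share of time actually spent on inference (0..1)

    def hourly_cost(self) -> float:
        amortized = self.hardware_cost / self.lifetime_hours
        energy = self.avg_power_w / 1000 * self.energy_price_per_kwh
        return amortized + energy + self.ops_cost_per_hour

    def cost_per_1k_inferences(self) -> float:
        served_per_hour = self.peak_inferences_per_s * self.useful_fraction * 3600
        return self.hourly_cost() / served_per_hour * 1000


if __name__ == "__main__":
    lifetime = 3 * 365 * 24  # 3 years of continuous operation
    for useful in (0.8, 0.4):
        node = NodeCostModel(30000.0, lifetime, 1000.0, 0.15, 0.5, 200.0, useful)
        print(f"useful={useful}: {node.cost_per_1k_inferences():.5f} per 1k inferences")
```

Halving `useful_fraction` doubles the cost per inference even though the hardware, power, and operations costs are unchanged, which is the sense in which idle time becomes a cost driver.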

From a practical perspective, the expensive part is often not the computation itself, but everything around it.

This is why AI computing infrastructure is increasingly treated as a system-level engineering problem rather than a hardware upgrade.

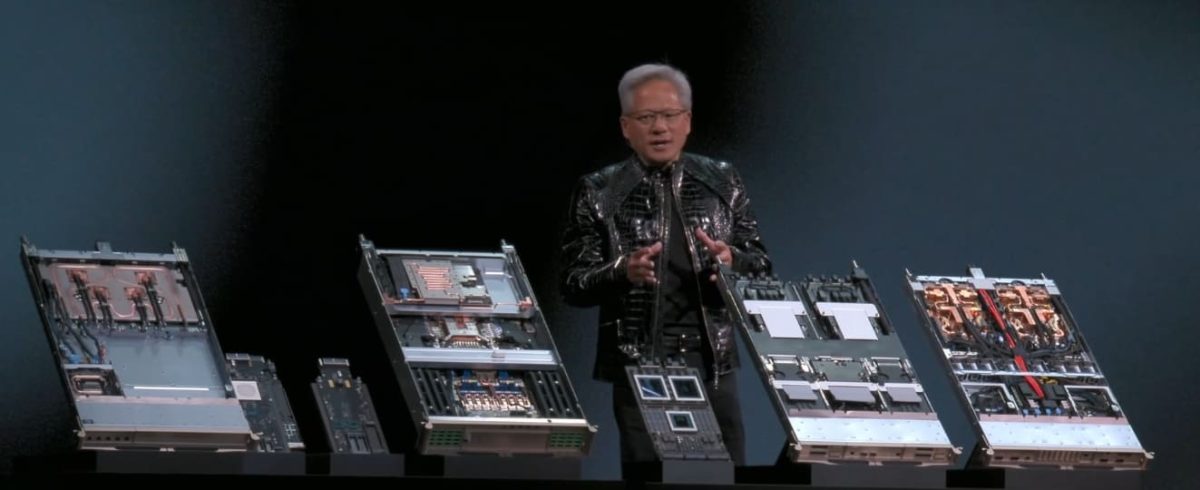

Why Rubin is positioned as a platform

These system-level limits explain why NVIDIA describes Rubin as a platform rather than just a chip.

The idea is to address system-level inefficiencies at design time, instead of fixing them after they appear in deployment.

By designing hardware, interconnects, and software together, the goal is to ensure that more compute time is actually used for inference, rather than being lost to waiting, coordination, or data transfers. This type of system-level optimization is particularly relevant for large-scale inference and distributed deployments.
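One generic technique for keeping compute time spent on inference is overlapping data transfers with computation, so the accelerator does not sit idle while the next batch is copied. The sketch below compares a serial schedule with a two-stage pipeline (double buffering) using a simple timing model; it illustrates the principle only and is not a description of how Rubin's hardware or software implements it. The batch count and per-batch times are hypothetical.

```python
def serial_time(n_batches: int, transfer_ms: float, compute_ms: float) -> float:
    """Each batch is copied to the accelerator, then computed; nothing overlaps."""
    return n_batches * (transfer_ms + compute_ms)


def overlapped_time(n_batches: int, transfer_ms: float, compute_ms: float) -> float:
    """Two-stage pipeline: the next batch is copied while the current one computes.

    After the first transfer, the slower stage sets the pace; the final
    compute step drains the pipeline.
    """
    if n_batches == 0:
        return 0.0
    return transfer_ms + (n_batches - 1) * max(transfer_ms, compute_ms) + compute_ms


def compute_busy_fraction(total_ms: float, n_batches: int, compute_ms: float) -> float:
    """Share of wall-clock time the accelerator spends computing."""
    if total_ms <= 0:
        raise ValueError("total time must be positive")
    return n_batches * compute_ms / total_ms


if __name__ == "__main__":
    n, transfer, compute = 100, 4.0, 6.0  # hypothetical per-batch times in ms
    for label, total in (("serial", serial_time(n, transfer, compute)),
                         ("overlapped", overlapped_time(n, transfer, compute))):
        busy = compute_busy_fraction(total, n, compute)
        print(f"{label:>10}: {total:.0f} ms total, compute busy {busy:.0%}")
```

With these numbers the serial schedule takes 1000 ms with the accelerator busy 60% of the time, while the overlapped one takes 604 ms. The same compute hardware does the same work; only the data path changed.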

Virtual AI training exposes limits faster

Virtual AI training makes system issues more visible.

High-quality environments, physical simulations, and parallel workloads place heavy pressure on scheduling and data paths.

For physical systems, including robots, machines, and autonomous equipment, virtual training helps cover scenarios that are difficult or expensive to reproduce in the real world. At the same time, it creates demanding workloads that quickly expose inefficiencies in the computing infrastructure.
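The pressure parallel simulation puts on scheduling can be illustrated with a lockstep design, where every step waits for the slowest environment. This is a hypothetical sketch: `synchronous_step` and the log-normal step-time distribution are assumptions chosen to mimic scenes of uneven complexity, and asynchronous or batched schedulers are one common way to reduce this waiting.

```python
import math
import random


def synchronous_step(env_step_ms: list[float]) -> tuple[float, float]:
    """Return (step time, mean worker utilization) for one lockstep simulation step.

    Every worker must wait for the slowest environment before results are
    gathered, so the step takes max(env_step_ms).
    """
    if not env_step_ms:
        raise ValueError("no environments")
    step = max(env_step_ms)
    utilization = sum(env_step_ms) / (len(env_step_ms) * step)
    return step, utilization


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    for n_envs in (4, 64, 1024):
        # Hypothetical step times: median 10 ms with a long tail.
        times = [rng.lognormvariate(math.log(10.0), 0.5) for _ in range(n_envs)]
        step, util = synchronous_step(times)
        print(f"{n_envs:>4} envs: step {step:6.1f} ms, utilization {util:.2f}")
```

As the number of environments grows, the slowest one tends to get slower, so step time rises and average utilization falls even though the typical environment has not changed.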

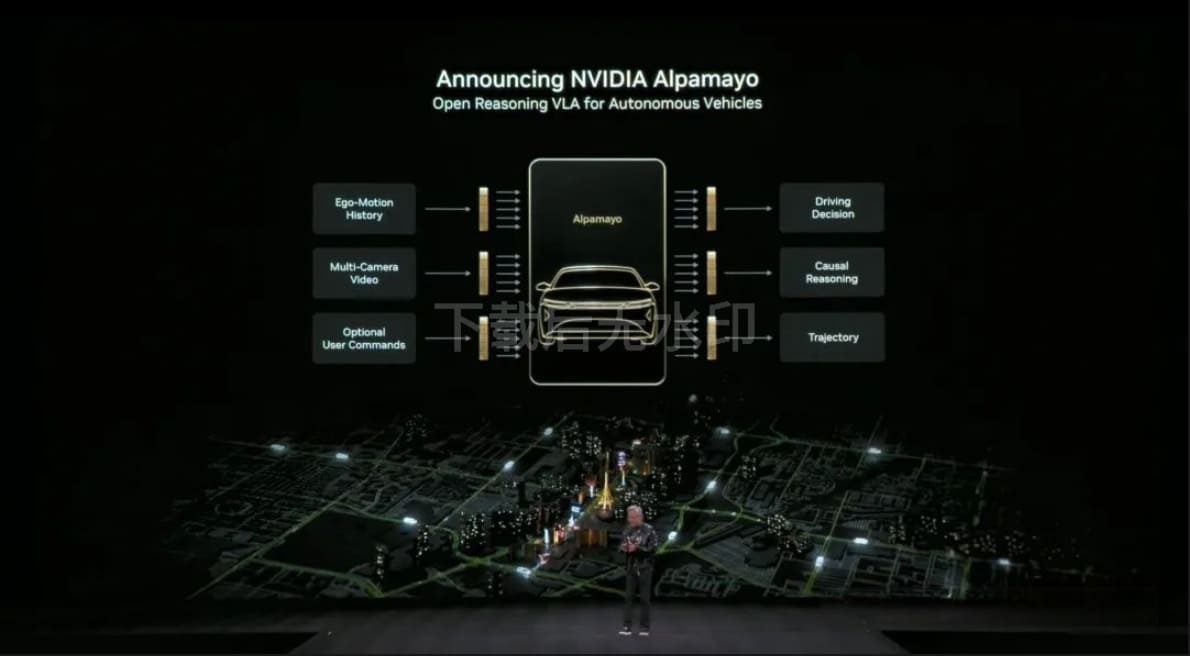

Autonomous driving pushes systems to the edge

Autonomous driving is one of the most demanding physical AI scenarios.

It combines multiple sensor inputs, constantly changing environments, and strict reliability requirements.

While automotive systems often run on specialized hardware, many of the same challenges also appear in industrial and edge AI applications: real-time processing, long-term stability, and tight control over power and system behavior.
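For real-time workloads, a useful habit is to check the tail latency of the whole processing chain against the frame period, rather than the average of each stage. The sketch below sums per-stage P99 latencies for a sequential pipeline; the stage names, the numbers, and the frame rates are hypothetical, and summing P99s is a rough heuristic rather than an exact or guaranteed bound.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    p99_ms: float  # tail latency of the stage, measured in isolation


def check_frame_budget(stages: list[Stage], frame_rate_hz: float) -> tuple[float, float, bool]:
    """Return (estimated P99 pipeline latency, frame budget, fits) in milliseconds.

    Assumes stages run strictly one after another on each frame.
    """
    budget_ms = 1000.0 / frame_rate_hz
    estimate_ms = sum(stage.p99_ms for stage in stages)
    return estimate_ms, budget_ms, estimate_ms <= budget_ms


if __name__ == "__main__":
    pipeline = [
        Stage("sensor decode", 4.0),
        Stage("sensor fusion", 6.0),
        Stage("perception", 14.0),
        Stage("planning", 8.0),
    ]
    for hz in (30.0, 40.0):
        estimate, budget, fits = check_frame_budget(pipeline, hz)
        print(f"{hz:.0f} Hz: {estimate:.1f} ms of {budget:.1f} ms budget, fits={fits}")
```

With these made-up numbers the pipeline fits at 30 Hz with little headroom (32 ms of about 33.3 ms) but not at 40 Hz (25 ms budget).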

A shift that matters for real-world AI

Looking at the overall message from CES 2026, one trend is becoming clear. AI computing is moving away from simply adding more compute and toward managing system complexity.

As AI continues to expand beyond data centers into factories, infrastructure, and edge environments, system efficiency becomes more important than peak performance. Reducing unnecessary overhead, simplifying data paths, and keeping systems stable over long periods will be key challenges.

This may not affect every deployment today. Smaller systems can still rely on traditional architectures. But for large-scale inference, physical AI, and industrial applications, system-level limits are already part of everyday engineering reality.

Disclaimer:

All images used in this article are sourced from NVIDIA’s official website and publicly released materials. If there are any copyright concerns or removal requests, please get in touch with us, and we will address them promptly. This article is intended for technical interpretation and information sharing only and does not constitute any form of commercial promotion or investment advice.